Introduction

Background & Motivation

Surface electromyography (sEMG) has emerged as a promising approach for finger gesture recognition in human-machine interface applications, particularly where visual imaging is impractical. However, EMG is highly susceptible to movement artifacts, individual motor variability, and changes in hand position, making gesture recognition challenging during dynamic hand movements.

Research Challenge

Most existing studies focus on controlled, static hand positions, limiting real-world applicability. The primary challenges include sensitivity to mechanical artifacts, dependence on electrode positioning, and overlapping sEMG patterns that complicate accurate differentiation between finger positions. Models trained only on fixed positions have limited predictive power when hands move freely.

Our Innovation

This study integrates a soft wearable sEMG sensor, a Video Vision Transformer (ViViT) model, and motion sensor-based training to enhance finger joint angle prediction and gesture recognition across both static and dynamic hand positions. We achieve recognition accuracy of up to 0.85 for static and up to 0.87 for dynamic conditions in high-performing participants (N=16).

Clinical Relevance

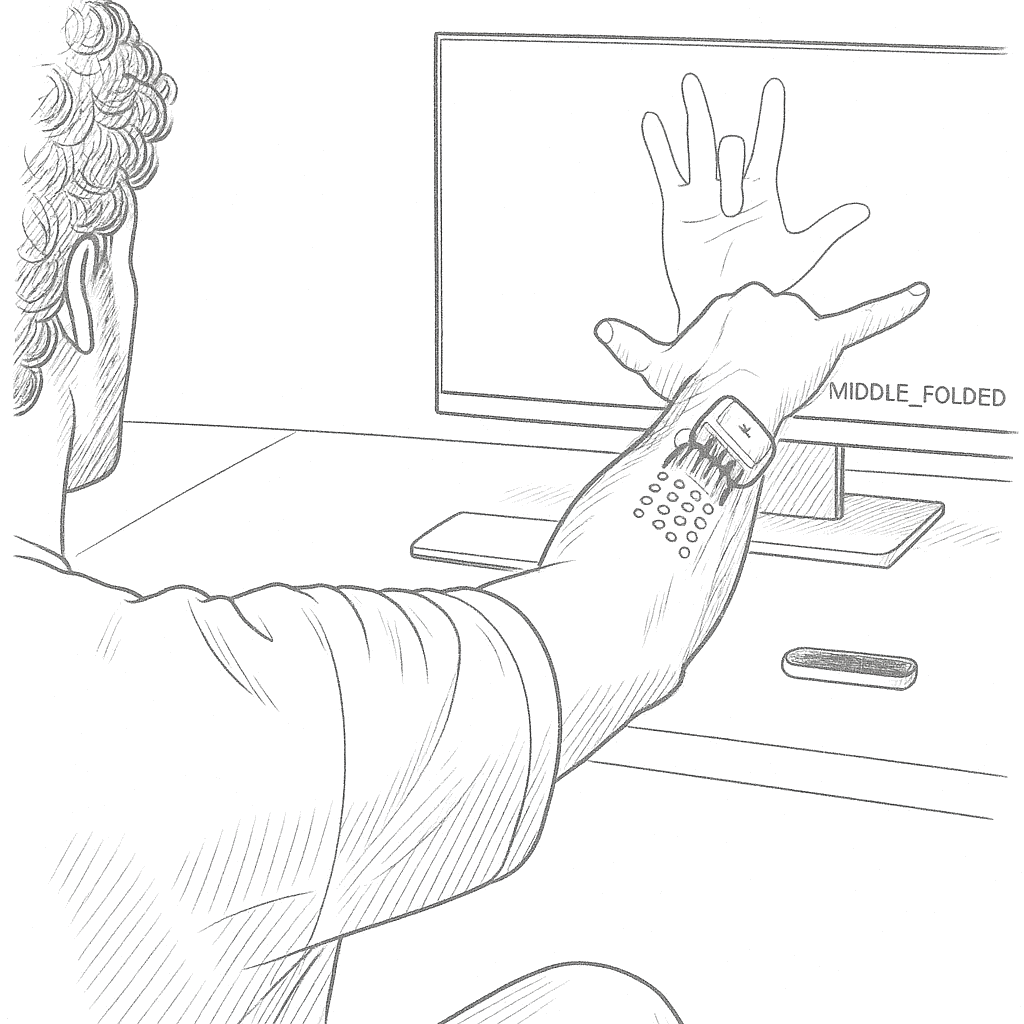

Accurate finger gesture recognition using soft wearable EMG sensors has significant implications for neurorehabilitation and assistive technologies, enabling enhanced motor function assessment, personalized rehabilitation, and improved prosthetic control for people with neuromuscular disorders or stroke. Because these flexible sensors can be worn comfortably outside controlled laboratory settings, they also make it possible to collect data "in the wild," yielding ecologically valid datasets that reflect users' everyday motor behavior.

Research Pipeline

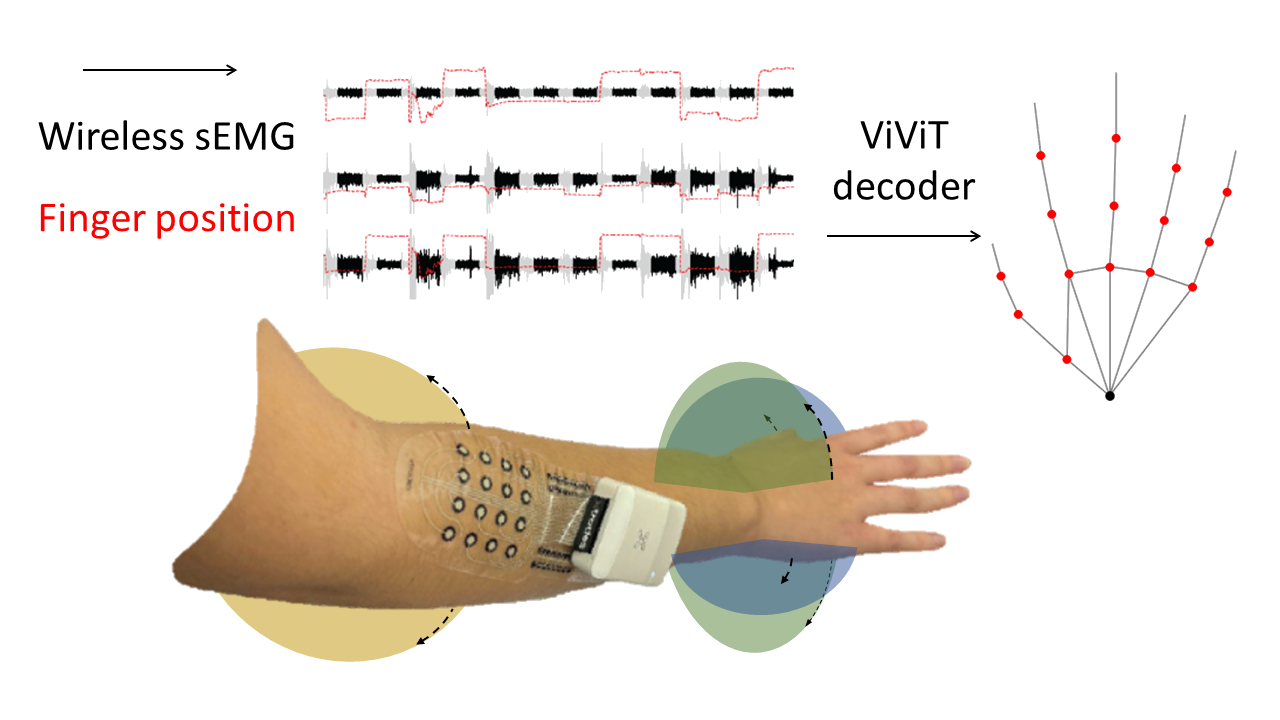

Figure 1: Pipeline from EMG acquisition to hand kinematic model

Key Objectives

- Develop EMG-based finger gesture recognition for dynamic hand positions

- Integrate a Video Vision Transformer (ViViT) model with motion sensor-based training

- Address individual motor variability and movement artifacts in real-world scenarios

- Advance toward more practical HMI applications in prosthetics and assistive technology

Methods

Experimental Protocol

Participants & Setup

- N=16 healthy participants (ages 21-30)

- Informed consent and ethical approval obtained

- Right-handed participants with no hand injuries

- Controlled laboratory environment

- Standardized electrode placement protocol

Experimental Conditions

- Static condition: three fixed hand positions

- Dynamic condition: natural hand movement

Gesture Tasks

- Hold duration: 5 seconds per gesture

- Rest interval: 3 seconds between gestures

Data Collection Protocol

- 16 healthy subjects (ages 21-30)

- 14 distinct finger gestures, 7 repetitions each

- 5 s gesture hold, 3 s rest period

- Electrode placement on the extensor digitorum muscle

- Approved by the Tel Aviv University Ethics Board (No. 0004877-3)

- Synchronized sEMG and Leap Motion recording
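The timing figures above imply a fixed trial schedule, which the following minimal sketch makes explicit. The hold, rest, gesture and repetition counts come from the protocol; the sequential gesture order, the `Trial` record and the `build_schedule` helper are illustrative assumptions, not the authors' acquisition code.

```python
from dataclasses import dataclass

HOLD_S = 5        # gesture hold duration (from the protocol)
REST_S = 3        # rest interval (from the protocol)
N_GESTURES = 14   # distinct gestures (from the protocol)
N_REPS = 7        # repetitions per gesture (from the protocol)


@dataclass
class Trial:
    gesture: int       # gesture index, 0..13
    repetition: int    # repetition index, 0..6
    hold_start: float  # seconds from the start of the recording
    hold_end: float


def build_schedule(n_gestures=N_GESTURES, n_reps=N_REPS,
                   hold_s=HOLD_S, rest_s=REST_S):
    """Lay out trials back to back; the sequential order is an assumption."""
    trials = []
    t = 0.0
    for g in range(n_gestures):
        for r in range(n_reps):
            trials.append(Trial(g, r, t, t + hold_s))
            t += hold_s + rest_s
    return trials


schedule = build_schedule()
total_s = schedule[-1].hold_end + REST_S  # include the final rest period
print(len(schedule), total_s)
```

Under these assumptions one pass through the gesture set contains 98 trials and lasts 784 s. The hold windows could then be used to label the synchronized sEMG and Leap Motion samples.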

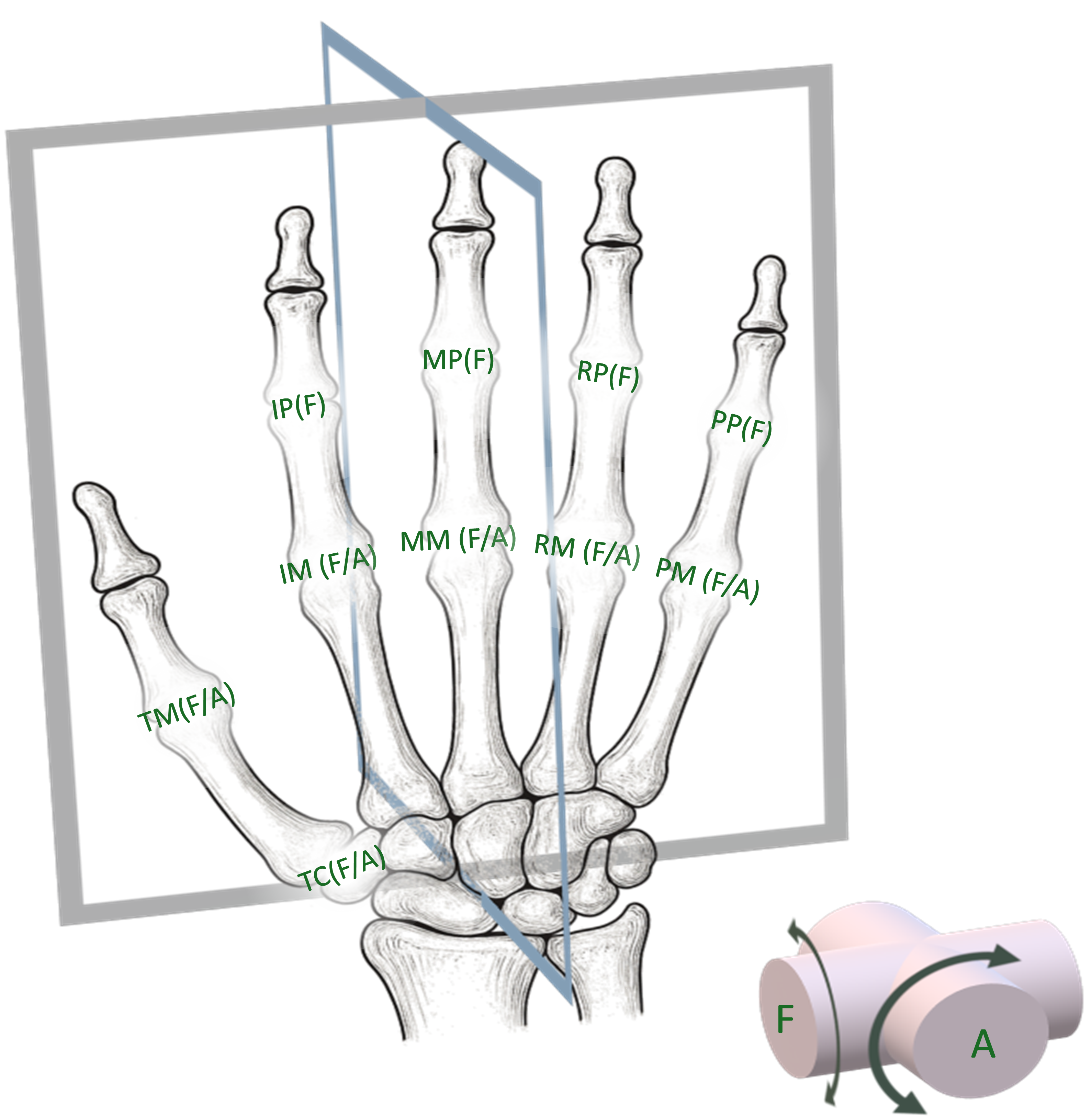

Hand Anatomy and Gesture Reference

🖐️ Hand Joint Angles & Gesture Classification 🖐️

📐 Joint Angle Measurements

Finger joint angles and measurement methodology

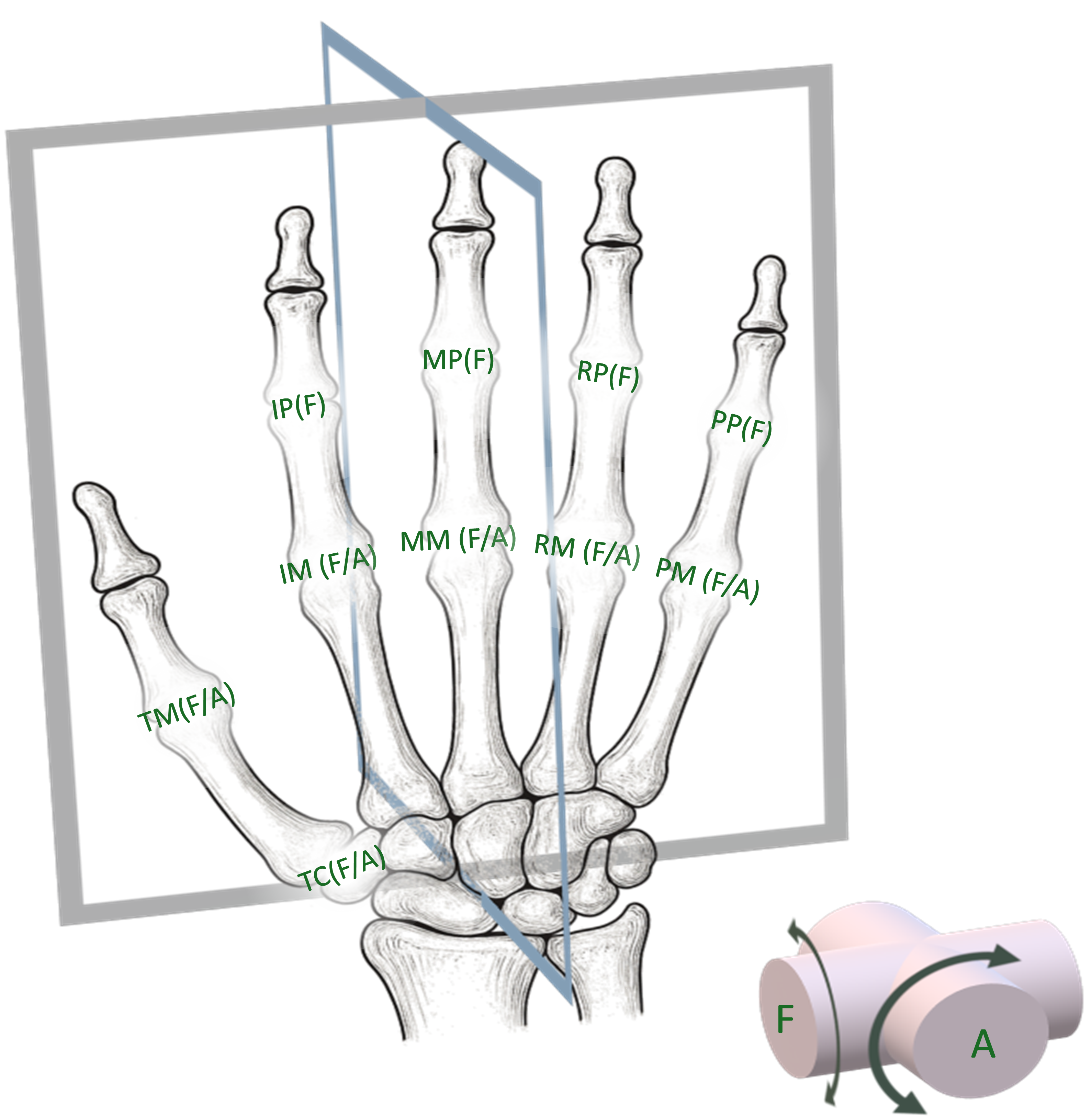

Figure: Hand anatomy showing joint angle measurements - (F) flexion or (A) abduction for each finger segment

✋ Gesture Classification

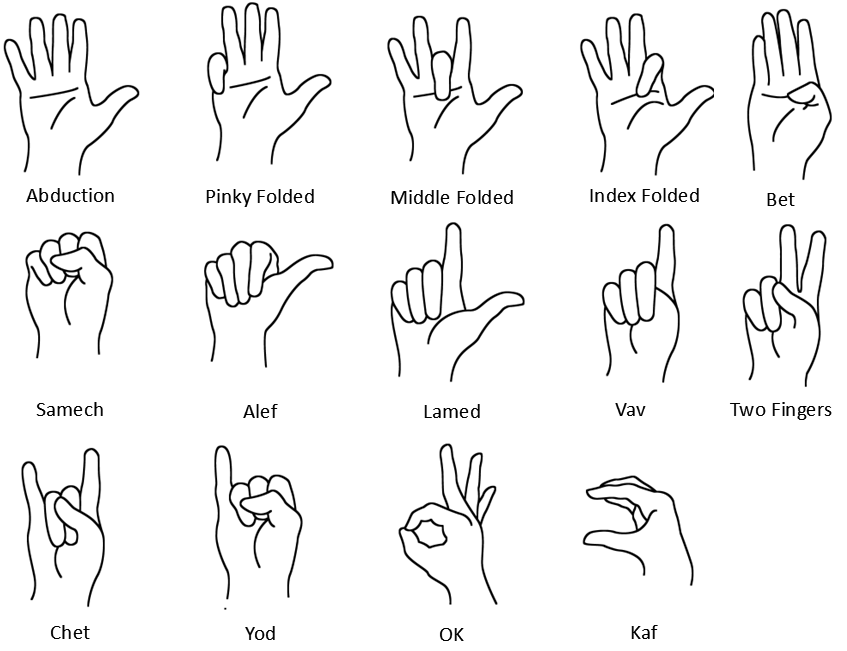

14 distinct finger gestures used in the study

Figure: Complete set of 14 hand gestures with Hebrew letter names and descriptions

🎯 Measurement Protocol

📐 Joint Angles:

Flexion or abduction measurements for precise finger tracking

✋ Gesture Set:

14 distinct gestures covering various finger configurations

⏱️ Protocol:

5s hold duration, 3s rest, 7 repetitions per gesture

Equipment and Hardware

EMG Acquisition System and Soft Electrode Array

- Dry carbon electrode arrays (X-trodes Inc.)

- 16 electrodes (4.5 mm diameter) in 4×4 matrix

- Flexible polyurethane (PU) film substrate

- 16 unipolar channels, 500 S/s, 16-bit resolution

- Integrated IMU (not used in current study)

- 16-channel flexible printed electrode array

- Conductive ink on flexible polymer substrate

- Electrode diameter: 8 mm, spacing: 15 mm

- Skin-safe adhesive interface

- Conformable to forearm anatomy

- Low contact impedance (<10 kΩ at 1 kHz)

Leap Motion System

- Leap Motion Controller 2 (Ultraleap Hyperion)

- 3D interactive zone: 110 cm range

- 160° field of view

- Tracks 27 distinct hand elements

- Maximum frame rate: 120 fps

- High IR sensitivity

Equipment Specifications

- Multi-channel EMG amplification system

- High-resolution video capture setup

- Synchronized data acquisition protocols

- Real-time processing capabilities

- Standardized experimental protocols

Signal Processing Pipeline

Preprocessing

- Signal filtering and noise reduction

- Motion artifact removal

- Signal normalization and calibration

- Data quality assessment and validation

Feature Extraction

- Sliding window: 512 ms with 2 ms overlap

- Z-score normalization
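The windowing and normalization steps can be sketched as follows. The 500 S/s rate and 512 ms window come from this poster; everything else is an assumption made for illustration: the 2 ms figure is read here as the step between consecutive windows (one sample at 500 S/s), the z-score is applied per window, and the input is synthetic. The helper names `ms_to_samples`, `sliding_windows` and `zscore` are hypothetical.

```python
from statistics import mean, pstdev

FS_HZ = 500      # sampling rate (from the equipment list)
WINDOW_MS = 512  # window length (from the feature-extraction list)
STEP_MS = 2      # assumed to be the step between windows


def ms_to_samples(ms, fs_hz=FS_HZ):
    """Convert a duration in milliseconds to a whole number of samples."""
    return int(round(ms * fs_hz / 1000))


def sliding_windows(signal, window, hop):
    """Yield windows of `window` samples, advancing by `hop` samples."""
    for start in range(0, len(signal) - window + 1, hop):
        yield signal[start:start + window]


def zscore(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # flat window: avoid dividing by zero
    return [(v - mu) / sigma for v in values]


window = ms_to_samples(WINDOW_MS)  # 256 samples at 500 S/s
hop = ms_to_samples(STEP_MS)       # 1 sample at 500 S/s
channel = [float((i * 7) % 11) for i in range(300)]  # synthetic single channel
windows = [zscore(w) for w in sliding_windows(channel, window, hop)]
print(window, hop, len(windows))
```

If the 2 ms figure is instead a true overlap, only `hop` changes (to `window` minus the overlap in samples); the rest of the sketch stays the same.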

Machine Learning Models

- Video Vision Transformer (ViViT) + ExtraTrees classifier

- CNN + LSTM + MLP

- CNN

- MLP

Validation Methods

- Inter-subject variability analysis

- Testing under different settings

Experimental Settings and Conditions

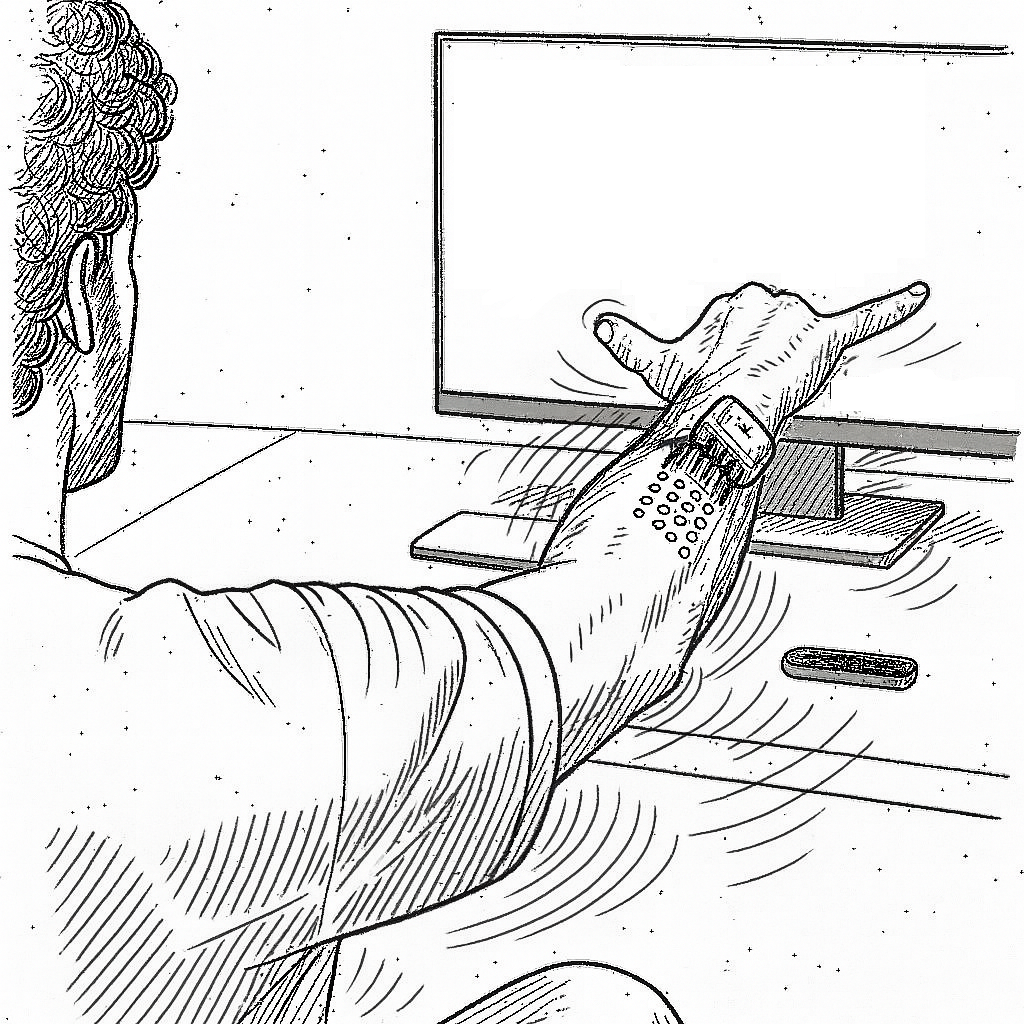

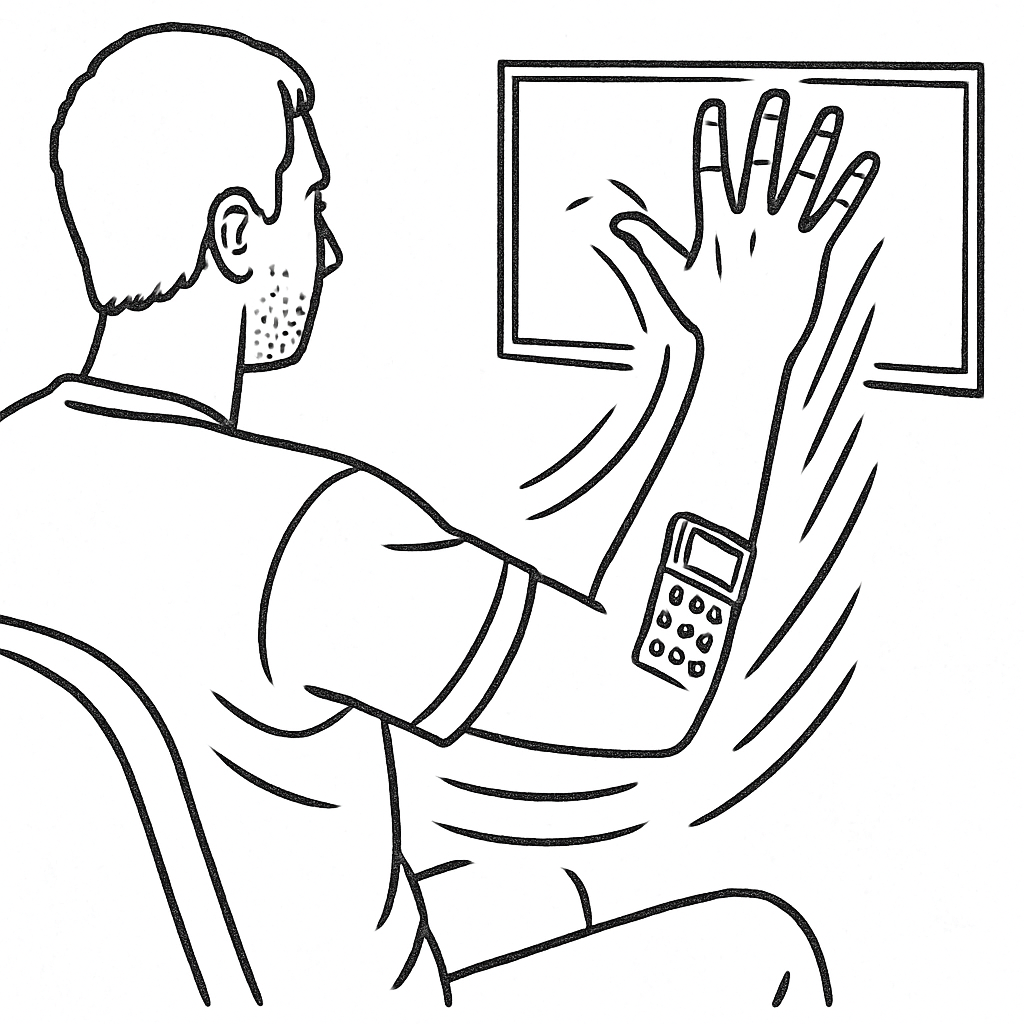

📊 Static vs Dynamic Experimental Setup 📊

✅ STATIC CONDITIONS (3 Conditions)

STATIC 1

Hand held down straight, muscles completely relaxed

STATIC 2

Hand extended 90° forward with palm relaxed

STATIC 3

Hand folded upwards with palm relaxed

🎬 DYNAMIC CONDITION (1 Condition with Movement)

Dynamic condition: Hand movement within camera detection range from each static position

🎯 Experimental Protocol Summary

✅ STATIC CONDITIONS:

Fixed hand positions, controlled gestures

🎬 DYNAMIC CONDITIONS:

Natural hand movement within detection range

Results

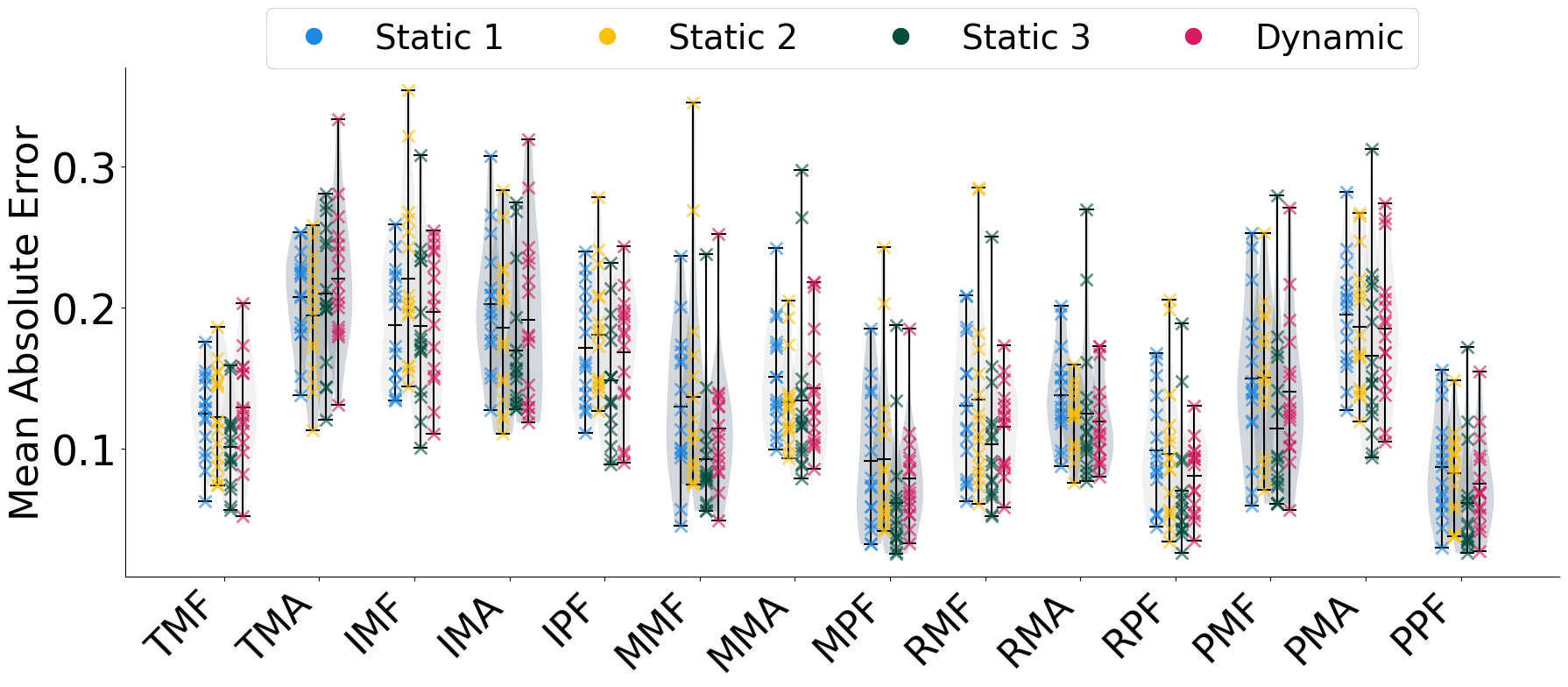

Finger Joint Angle Prediction

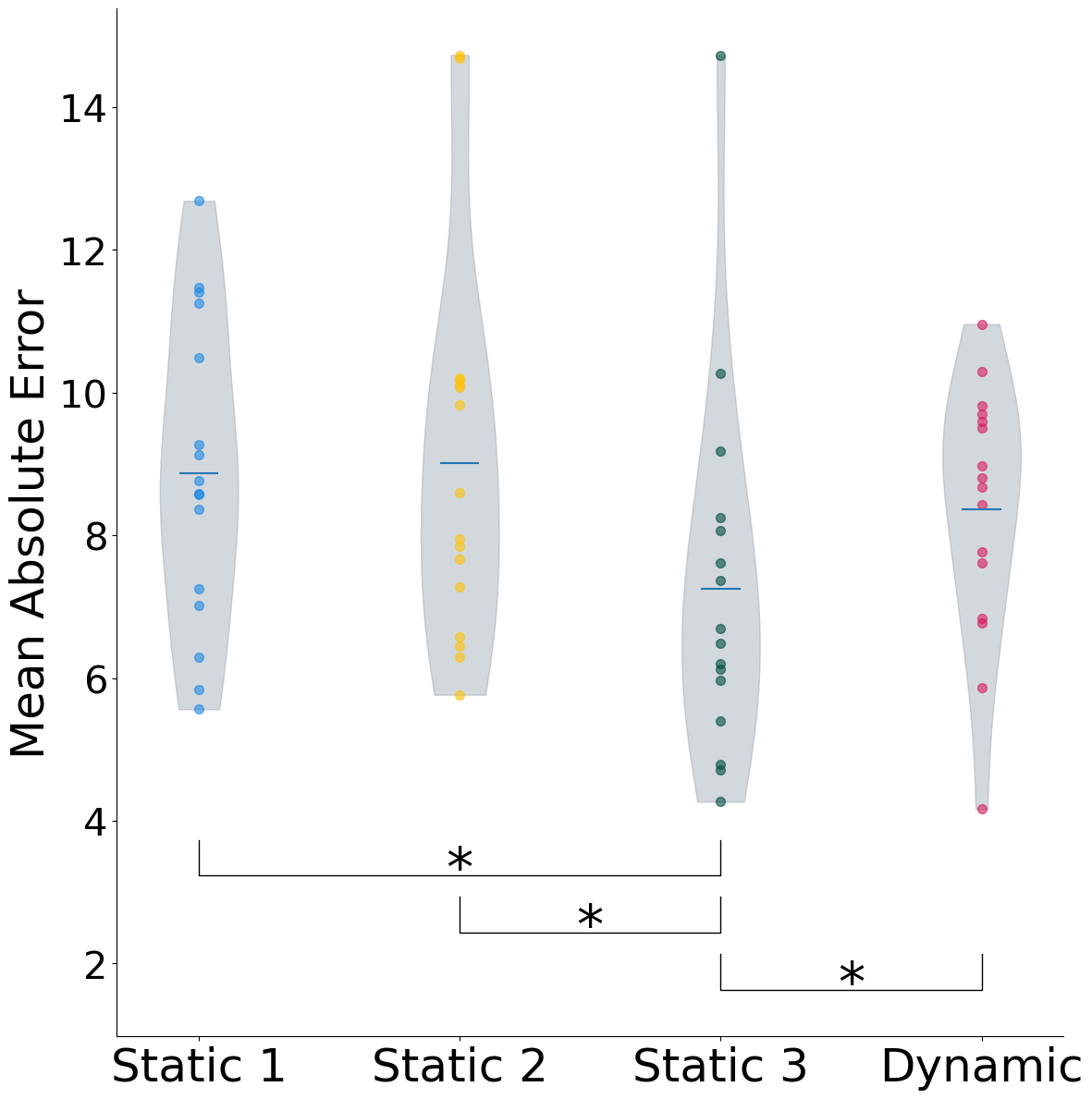

After model training, the ViViT model was used to transform input data into a 16-dimensional vector representing key hand kinematic landmarks (Fig 1). The model's outputs were validated by comparing predicted finger joint angles to motion sensor measurements. The mean absolute prediction error per joint is presented in Fig 2, while Fig 3 shows the error distribution across hand position settings.

Fig 1. Kinematic model with labeled joints.

Fig 2. Mean absolute error per joint.
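The per-joint error metric described above can be computed along these lines. This is a minimal sketch: the `mae_per_joint` helper, the frame layout (one list of joint angles per frame) and the example values are illustrative assumptions, not study data.

```python
def mae_per_joint(predicted, measured):
    """Mean absolute error for each joint, averaged over frames.

    Both arguments are lists of frames; each frame is a list of joint angles
    in the same order and the same unit.
    """
    if len(predicted) != len(measured) or not predicted:
        raise ValueError("need the same non-zero number of frames")
    n_joints = len(predicted[0])
    totals = [0.0] * n_joints
    for p_frame, m_frame in zip(predicted, measured):
        for j in range(n_joints):
            totals[j] += abs(p_frame[j] - m_frame[j])
    return [t / len(predicted) for t in totals]


# Illustrative values only (3 joints, 2 frames); not taken from the study.
pred = [[10.0, 20.0, 30.0], [12.0, 18.0, 33.0]]
meas = [[11.0, 20.0, 27.0], [10.0, 19.0, 30.0]]
print(mae_per_joint(pred, meas))  # [1.5, 0.5, 3.0]
```

Grouping frames by hand position setting before calling the same function would give a per-setting error breakdown.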

Gesture Classification Performance

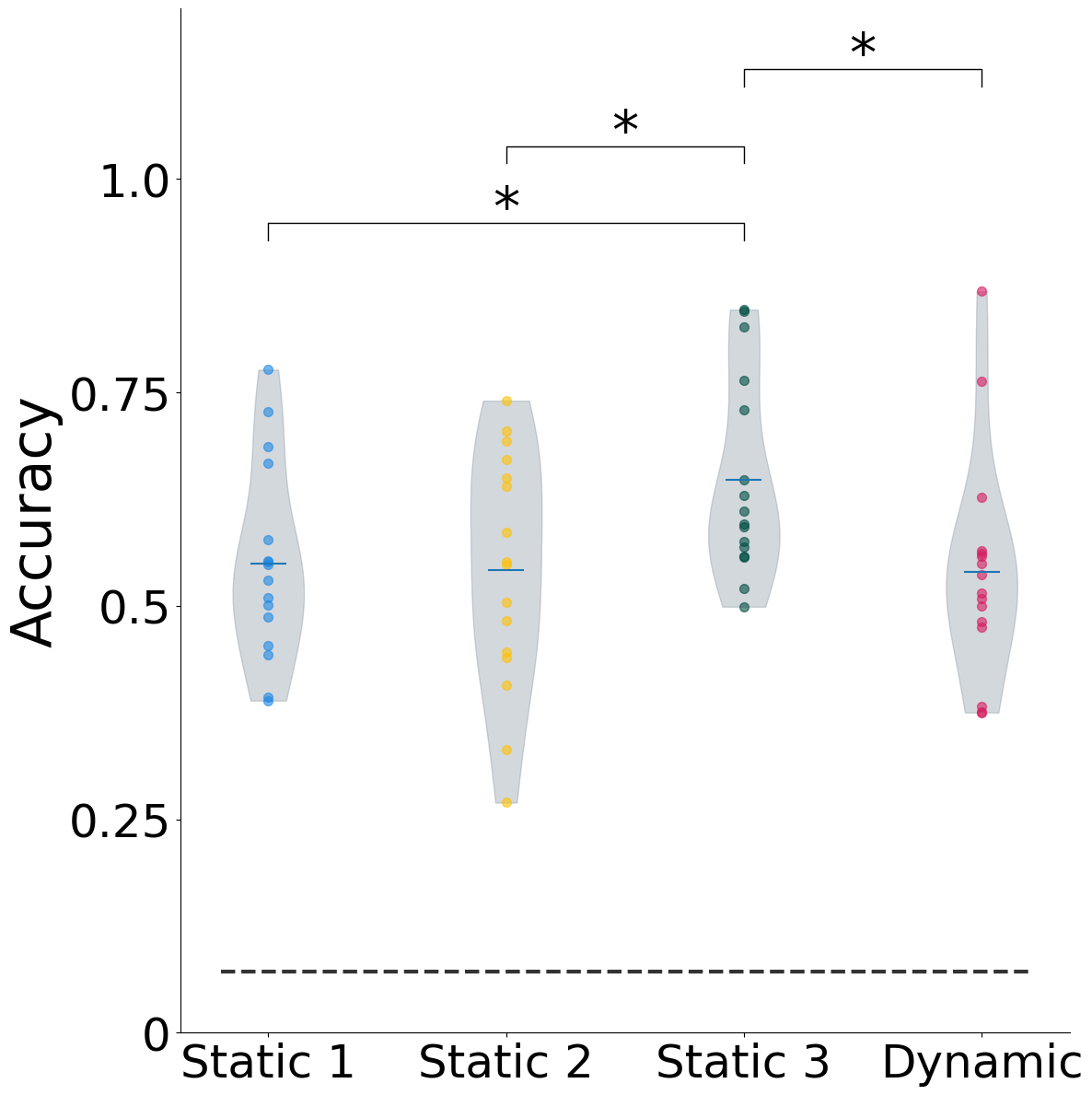

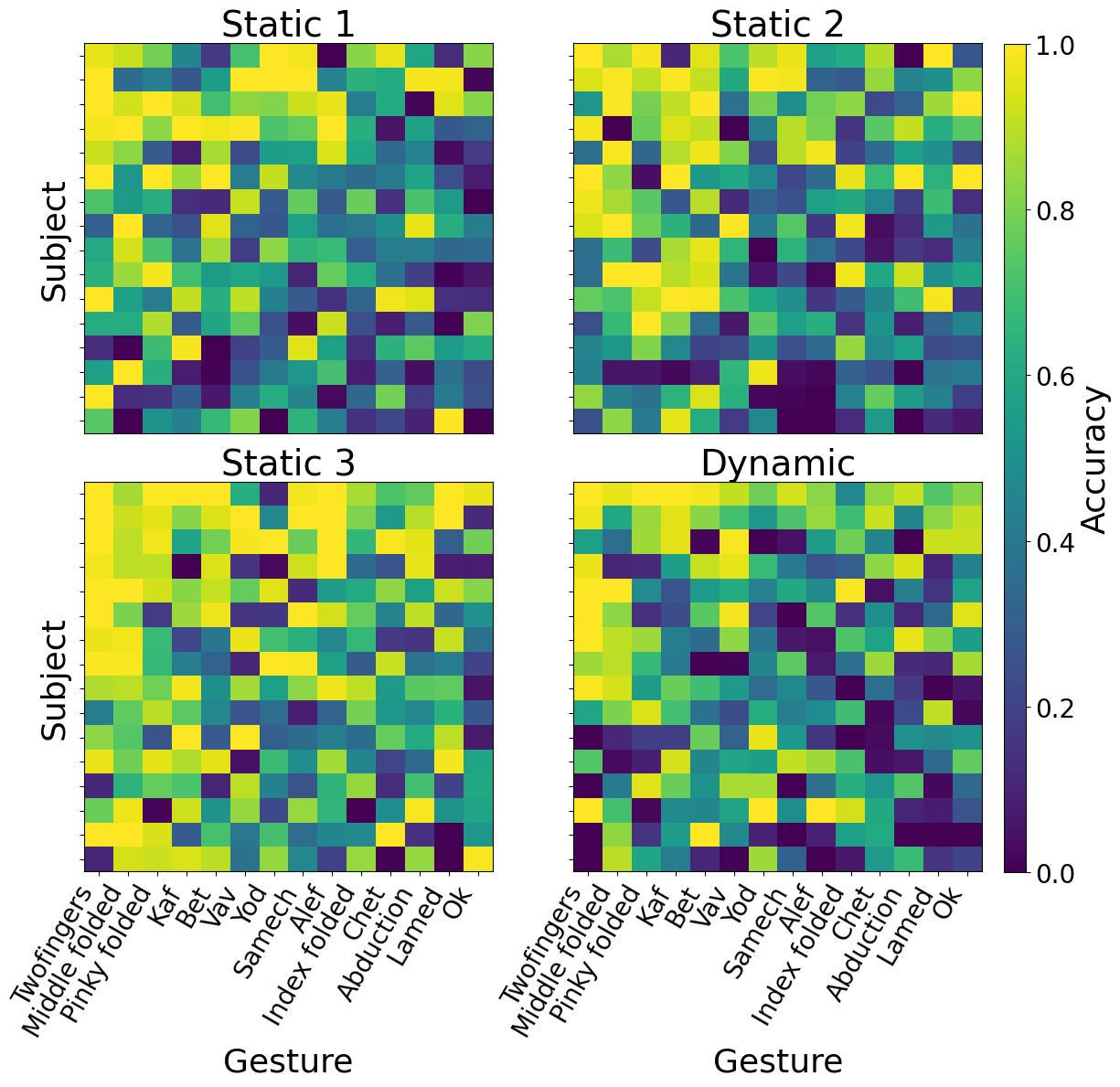

To further quantify the model's performance, we examined classification accuracy. A classifier was used to map the model's regression output into a discrete set of 14 gestures. The overall accuracy per setting is shown in Fig 4, while the heatmaps in Fig 5 reveal the detailed classification performance for each specific gesture across all subjects and conditions.

Fig 3. Mean absolute error per setting.

Fig 4. Gesture classification accuracy per setting.

Fig 5. Accuracy heatmap for each gesture across all subjects and conditions.
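The step of mapping a regression output vector to a discrete gesture can be illustrated with a deliberately simple stand-in. The poster uses an ExtraTrees classifier for this step; the sketch below swaps in a nearest-centroid rule so that it runs with the Python standard library alone. The function names and the two-dimensional toy vectors are assumptions for illustration only.

```python
import math


def fit_centroids(vectors, labels):
    """Average the training vectors belonging to each gesture label."""
    sums, counts = {}, {}
    for vec, label in zip(vectors, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}


def predict(centroids, vec):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda lab: math.dist(centroids[lab], vec))


def accuracy(centroids, vectors, labels):
    """Fraction of vectors assigned to their true label."""
    hits = sum(predict(centroids, v) == y for v, y in zip(vectors, labels))
    return hits / len(labels)


# Toy 2-D vectors standing in for the model's regression output.
train_x = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]]
train_y = ["a", "a", "b", "b"]
centroids = fit_centroids(train_x, train_y)

test_x = [[1.0, 1.0], [4.0, 6.0], [3.0, 3.0]]
test_y = ["a", "b", "a"]
print(predict(centroids, test_x[0]), accuracy(centroids, test_x, test_y))
```

A tree ensemble can learn non-spherical decision regions that a centroid rule cannot, so this sketch shows only the interface (vector in, gesture label out), not the expected accuracy.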

Model Comparison (Mean Accuracy ± SD)

Accuracy across different models with equal parameter counts for 14 gestures across 16 subjects.

| Setting | ViViT | CNN+LSTM | CNN | FC |
|---|---|---|---|---|
| Static 1 | 0.549 ± 0.114 | 0.39 ± 0.175 | 0.306 ± 0.145 | 0.186 ± 0.1 |
| Static 2 | 0.542 ± 0.139 | 0.437 ± 0.157 | 0.302 ± 0.124 | 0.226 ± 0.097 |
| Static 3 | 0.648 ± 0.117 | 0.478 ± 0.171 | 0.401 ± 0.117 | 0.282 ± 0.086 |
| Dynamic | 0.540 ± 0.131 | 0.357 ± 0.174 | 0.283 ± 0.096 | 0.218 ± 0.079 |
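To put the table in context, the short sketch below ranks the models per setting and compares the best mean accuracy with chance level. The mean accuracies are copied from the table; the chance level of 1/14 assumes the 14 gestures are equally represented, and the `summarize` helper is hypothetical.

```python
# Mean accuracies copied from the model-comparison table.
ACCURACY = {
    "Static 1": {"ViViT": 0.549, "CNN+LSTM": 0.390, "CNN": 0.306, "FC": 0.186},
    "Static 2": {"ViViT": 0.542, "CNN+LSTM": 0.437, "CNN": 0.302, "FC": 0.226},
    "Static 3": {"ViViT": 0.648, "CNN+LSTM": 0.478, "CNN": 0.401, "FC": 0.282},
    "Dynamic": {"ViViT": 0.540, "CNN+LSTM": 0.357, "CNN": 0.283, "FC": 0.218},
}
N_GESTURES = 14
CHANCE = 1 / N_GESTURES  # assumes balanced gesture classes


def summarize(table):
    """For each setting, report the best model, its lead, and its ratio to chance."""
    summary = {}
    for setting, row in table.items():
        ranked = sorted(row, key=row.get, reverse=True)
        best, runner_up = ranked[0], ranked[1]
        summary[setting] = {
            "best": best,
            "margin": row[best] - row[runner_up],
            "times_chance": row[best] / CHANCE,
        }
    return summary


for setting, s in summarize(ACCURACY).items():
    print(f"{setting}: best={s['best']}, margin={s['margin']:.3f}, "
          f"{s['times_chance']:.1f}x chance")
```

On these numbers ViViT has the highest mean accuracy in every setting, leading CNN+LSTM by 0.105 (Static 2) to 0.183 (Dynamic). The standard deviations in the table are large relative to some of these margins, so the comparison of means alone does not establish significance.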

Analysis of Accuracy Variability

Inter-Subject Variability

Model accuracy varied widely across subjects (from 50% to 90%), which we attribute to the natural, unconstrained data collection conditions.

- Behavioral Timing: Only a weak link was found between accuracy and deviation from ideal gesture timing (R² = 0.083).

- sEMG Features: Accuracy improved with more slope sign changes but decreased with higher zero crossings and greater central frequency variance.
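Two of the sEMG features named above, zero crossings and slope sign changes, have widely used time-domain definitions, sketched below. The optional amplitude threshold and the example signal are illustrative assumptions; the poster does not state which variant or threshold was used, and the central frequency variance feature is not covered here.

```python
def zero_crossings(x, threshold=0.0):
    """Count sign changes between consecutive samples.

    A crossing is counted only if the two samples differ by at least
    `threshold`, which suppresses crossings caused by low-level noise.
    """
    count = 0
    for a, b in zip(x, x[1:]):
        if a * b < 0 and abs(a - b) >= threshold:
            count += 1
    return count


def slope_sign_changes(x, threshold=0.0):
    """Count local peaks and troughs, i.e. points where the slope changes sign."""
    count = 0
    for prev, cur, nxt in zip(x, x[1:], x[2:]):
        is_extremum = (cur - prev) * (cur - nxt) > 0
        big_enough = abs(cur - prev) >= threshold or abs(cur - nxt) >= threshold
        if is_extremum and big_enough:
            count += 1
    return count


# Illustrative samples only; not study data.
signal = [1.0, -1.0, 2.0, -2.0, 1.0, 3.0, 2.0]
print(zero_crossings(signal), slope_sign_changes(signal))  # 4 4
```

Both counts would typically be computed per channel and per analysis window, then related to per-subject accuracy.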

Impact of Gesture Execution

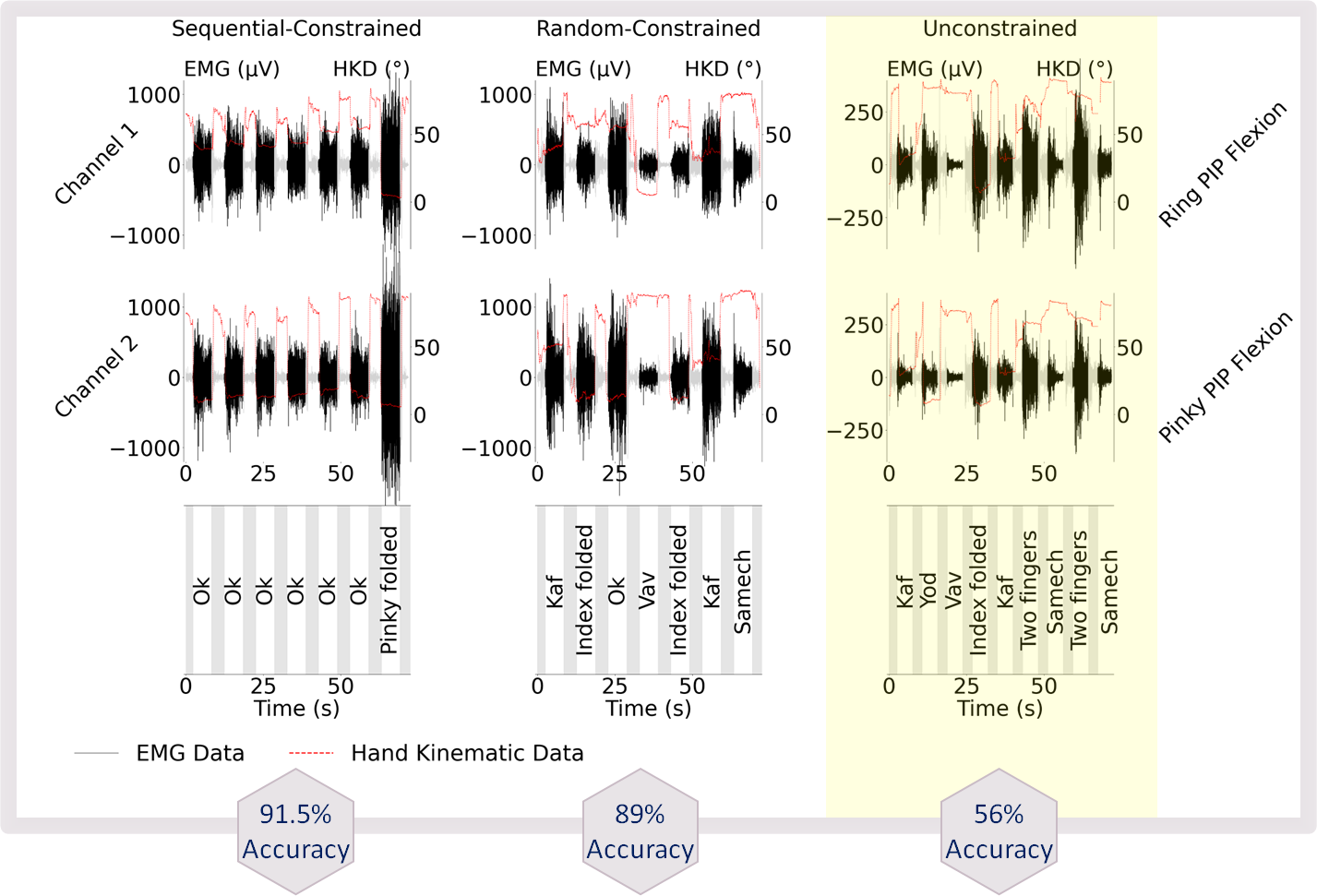

To examine the effect of gesture execution, a re-test was conducted with one subject, who was instructed to apply greater force and to rest between repetitions. This guided protocol resulted in clearer, more distinct sEMG signals and markedly higher accuracy, as shown in Fig 6.

Fig 6. Comparison of sEMG signals and resulting accuracy for unconstrained (right), random-constrained (middle), and sequential-constrained (left) gesture execution. The guided protocols lead to cleaner signals and higher accuracy.

Funding & Acknowledgments

Research Funding

This work was supported by the European Research Council (ERC), Grant Outer-Ret—101053186.

Acknowledgments

The authors thank Ofek Hava for help in data collection and Hila Man for many fruitful discussions. We also acknowledge the contributions of all study participants and the technical support team.

Conference Presentation

IEEE EMBC 2025

Title: Finger Joint Angle and Gesture Estimation Under Dynamic Hand Position Using a Soft Printed Electrode Array

Authors: Nitzan Luxembourg¹, Ieva Vėbraitė¹, Hava Siegelmann², Yael Hanein¹,³

¹ School of Electrical and Computer Engineering, Tel Aviv University, Tel Aviv, Israel

² UMass-Amherst College of Information and Computer Sciences, 140 Governors Dr., Amherst, MA 01003, USA

³ X-trodes, Herzelia, Israel

Conference: 47th Annual International Conference of the IEEE Engineering in Medicine and Biology Society

Contact & Collaboration

Research Contact

Lead Author: nitzan.luxembourg@mail.huji.ac.il

Principal Investigators: Prof. Hava Siegelmann and Prof. Yael Hanein

Collaboration Opportunities

Open to collaborations in EMG signal processing, wearable sensors, and clinical applications.